Esperanto Newspaper Excerpts

The main goal of the CLARIAH-AT project Esperanto Newspaper Excerpts was to create complete texts for newspaper articles from the Hachette collection. This collection contains ca. 17,000 articles about Esperanto from the period 1898 until 1915, which are being held at the Department of Planned Languages of the Austrian National Library (ONB). In this topic we want to present the starting point, progress and results of the yearlong research project.

Contents:

1. Starting point and motivation

The Department of Planned Languages of the Austrian National Library – which emerged from the Esperanto Museum founded in 1927 – has extensive holdings on Esperanto and other planned languages. Planned languages are languages deliberately created according to certain criteria. These are studied in interlinguistics, a branch of linguistics.

The Hachette collection

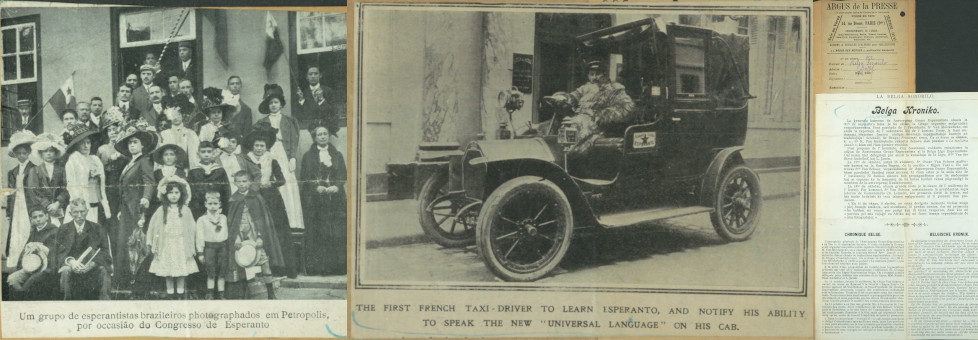

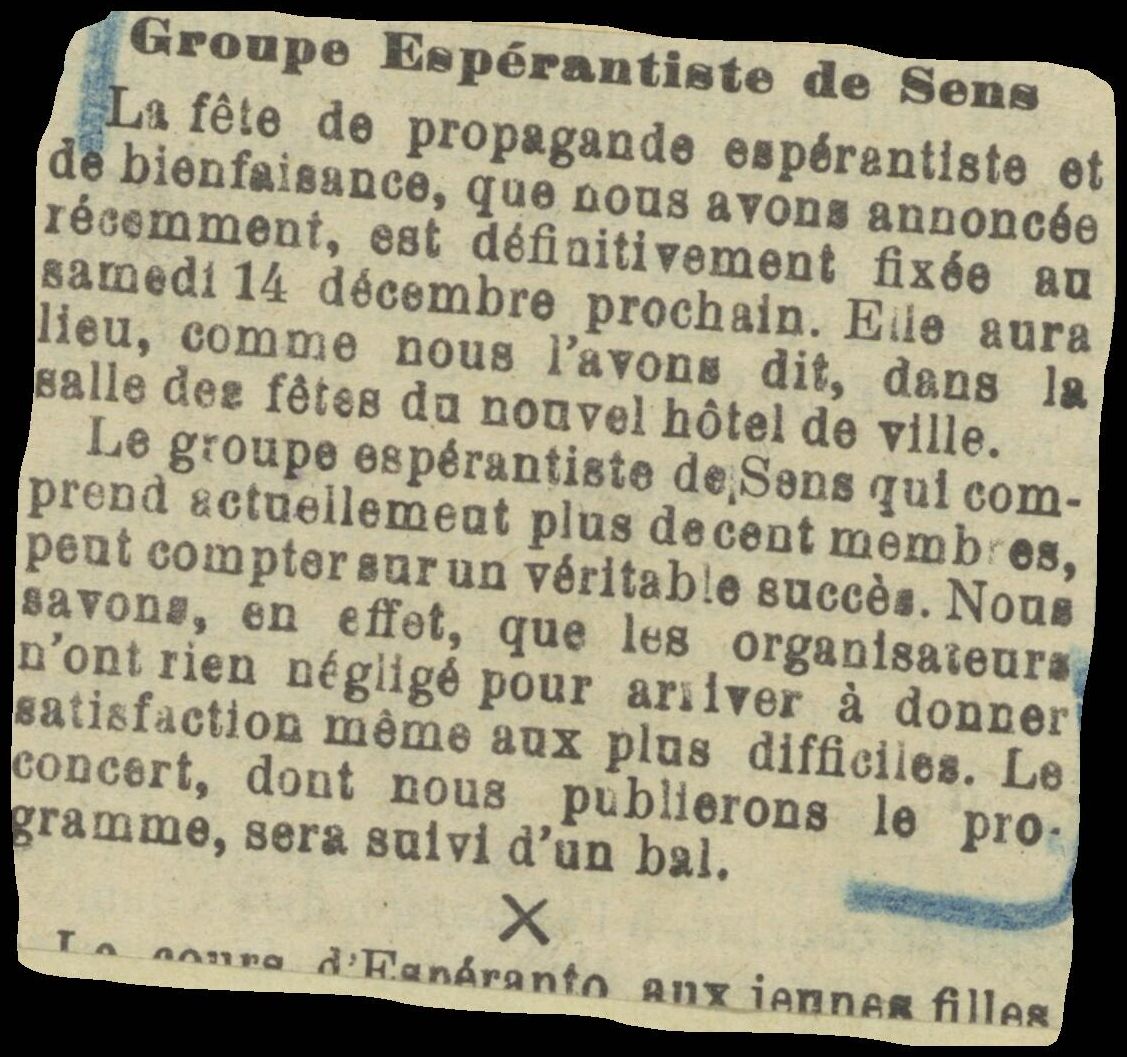

The collection of newspaper cuttings processed in this project consists of around 17,000 articles taken from magazines published in many different European countries between 1898 and 1915. The articles themselves report on events and people related to Esperanto, e.g. reports from Esperanto World Congresses, and therefore provide an excellent and unique opportunity to study the history of the Esperanto movement in Europe in the early 20th century.

In addition to the digitized data created at the ONB for each newspaper article, there is already metadata created by staff of the Department of Planned Languages. This includes information on the title, author, journal, publication date, location and language (and in some cases keywords) for each newspaper article.

Research questions

There is great interest in this period and collection among interlinguists (e.g. from researchers in Princeton, St. Andrews, Berlin and Vienna). There is a particularly interesting part of the collection: the 6,632 articles published in French newspapers are extensive in their (almost) complete coverage. As the articles in the collection are sorted chronologically and by place of publication, it is possible to extract this part from the larger set. It is then possible to answer research questions about names of people, places, associations and other entities using the full texts of the newspaper articles.

As a result of the project, this important collection of Esperanto articles will become searchable, which will be of great value to the research community and anyone interested in Esperanto and this period in particular. We will open the collection to further research as the text becomes accessible for analysis using digital methods (NLP, NER, TF/IDF, etc.). Finally, we will complement the collection by moving from simple images to images with text files and metadata in one place.

Application and funding

At the time of our planning, we became aware of the Funding Call 2022 (“Interoperability and reusability of DH data and tools”) of the CLARIAH-AT consortium, which aimed to support the promotion of interoperability and reusability of tools, methods and research infrastructures in line with the Digital Humanities Austria Strategy 2021+ and the strategic goals of the CLARIN ERIC and DARIAH-EU infrastructure consortia.

We found that our project idea was a very good fit for this call and wrote and submitted our application accordingly. To our great delight, it was accepted and the project was supported for one year with half a developer position.

2. Pipeline and technologies used

Overview of the pipeline

A pipeline was developed in the project with which full texts could be extracted from the original images. Roughly speaking, this pipeline consists of four steps:

- Segmentation of the images belonging to an article into individual text boxes

- Rotating the boxes so that the text is horizontal

- Applying Tesseract to each box for text recognition

- Creating a IIIF manifest per article from the generated ALTO-XML files

Article segmentation with YOLOv8

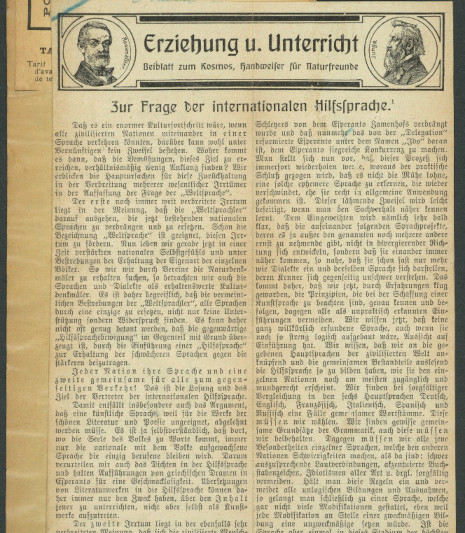

For the layout analysis task, we use the YOLOv8 model (see here for more information), which is a powerful model for common tasks such as object recognition, image classification and segmentation. To improve the quality of layout recognition, a fine-tuning was performed with manually annotated images. The model was then applied to all 22,247 images in the dataset and all areas annotated with “text” were passed on to the next stage. See Figure 1 for a visual representation of the data generated in this step.

Straight rotation of the text boxes

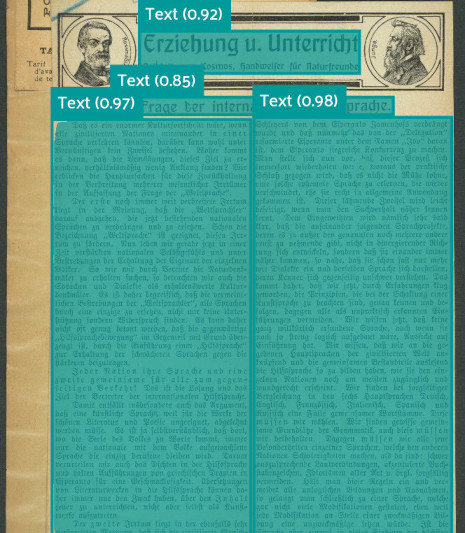

A very typical problem with the newspaper clippings in this collection is that the clippings are not glued parallel to the edges of the paper (and sometimes there are several clippings on one sheet that are rotated against each other). However, the quality of the full texts generated with typical OCR software decreases significantly if the input texts are not horizontal. Therefore, we have developed a Python script based on the open library OpenCV, which detects the direction (or angle) of a text block and then rotates the image back by this angle. See Figure 2 for a demonstration of the application of the script to two example images.

Text recognition using Tesseract

We used the open source software Tesseract OCR for text recognition, as Esperanto and other languages in the dataset are directly supported. Otherwise, Groundtruth texts would have had to be created manually in Esperanto to train the OCR software. Tesseract is called up with the following settings:

langs = 'fra+deu+Fraktur+frk+eng+spa+ita+epo+rus+nld+por+pol+ces+cat+swe+dan+hun+lat+bul+fin+isl+ron'tesseract -l {langs} --oem 1 --psm 1 {deskewed_path} {ocr_path}-deskewed.alto alto txt

Here deskewed_path stands for the file path of the image with straight text generated in the second step and ocr_path for the destination of the text data to be generated. We have thus created an ALTO XML file for each text box.

Creating IIIF manifests from ALTO-XML files

In the fourth and final step, the ALTO-XML files belonging to all text boxes on a page are combined into a single ALTO-XML file and this in turn is converted into IIIF-compliant annotations. A IIIF manifest is then created for each article with one or more images, each of which contains the text annotations in question.

3. Results

Entries in the ONB catalog

The metadata mentioned above has now also been integrated into the ONB catalog system as part of the project and can be found there using the search term “Hachette collection”. This also allows searches to be carried out using the catalog's usual filter methods and includes, in particular, a reference to the corresponding digital copies.

Solr full-text search

The main aim of the project was to make the full texts of the newspaper articles searchable. This has been done with the help of Solr and we offer a full-text search in this collection via the ONB Labs as a tool. In fact, the chosen implementation allows a combined search in all metadata (title, author, journal, date, place, language, keywords) as well as the full texts, including Solr's usual similarity search.

Dataset

We offer the data generated in the project as a bundled dataset here. In addition to the project results as a download, further information on the scope and content of the dataset can be accessed there. There is also rights information, a citation suggestion and the option to browse the dataset.

IIIF Manifests

In the spirit of interoperability and reusability, which was desired in the call for proposals, we have created a IIIF collection that contains all IIIF manifests generated in the project and thus allows access to all images, metadata and full texts. This collection can be found here.

4. Links and funding information

This project was supported by CLARIAH-AT. The website of the project at the funding body can be found here.

Contribute

The source code, as well as the training dataset and the generated model for layout analysis can be found in the project's Gitlab repository.