Colorized Postcards

Historical Postcards: Black & White vs. Color

At ONB Labs, we provide metadata for a dataset of 34,846 digitised historical postcards, of which 27,179 are printed in black and white and 7,667 are printed in color. You can browse through the dataset here. This dataset is part of the larger set of all historical postcards of the ONB, which can be accessed via the AKON platform. For the ONB Labs dataset, however, we selected only those postcards from the AKON dataset that are in the public domain.

As roughly three quarters of the postcards in our dataset are printed in black and white, we asked ourselves whether we could use a computer vision algorithm to colorize them. Since the subset of color postcards is of ample size, we also hoped to use it as training data for machine learning methods. One difficulty that was apparent before we started is that the color palette of historical postcards differs from that of modern photographs. The difference is probably due to aging processes and to the different printing methods used for these postcards.

R. Zhang's Colorization Project

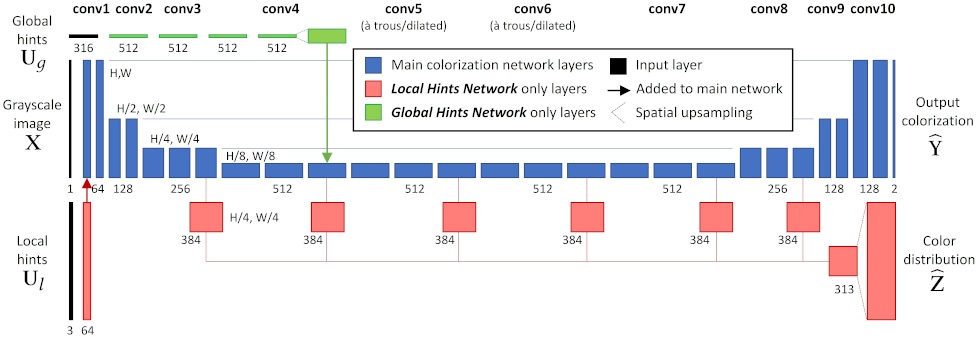

One of the open source projects we came across is by Richard Zhang (research scientist at Adobe Research) and his collaborators. It is based on two papers (see Colorful Image Colorization and Real-Time User-Guided Image Colorization with Learned Deep Priors). In both cases, a Convolutional Neural Network (CNN, see the blue part in figure 2) creates a color image from a grayscale image. Code to run a demo version of their program is available on GitHub.

The two models presented in these papers were trained on a very large dataset of over a million images. As the models work in Lab color space, any ordinary color image can be used for training: the model takes the L channel (lightness) as input and predicts the a and b channels (color information), which can then be compared to the a and b channels of the original image.
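To make the role of the Lab color space more concrete, here is a minimal sketch in plain Python that converts a single sRGB pixel to Lab and splits it into a network input (L) and a training target (a, b). It is not taken from the code of R. Zhang et al.; the function names `srgb_to_lab`, `split_training_pair` and `ab_distance` are hypothetical, and the Euclidean distance is only one assumed way of comparing a prediction with the original colors.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIE Lab (D65 white point)."""
    def to_linear(c):
        # Undo the sRGB gamma curve.
        c = c / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = to_linear(r), to_linear(g), to_linear(b)

    # Linear RGB -> CIE XYZ (standard sRGB/D65 matrix).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    # Normalize by the D65 reference white before applying f.
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def split_training_pair(pixel):
    """Return (network input, training target) for one color pixel."""
    lightness, a, b = srgb_to_lab(*pixel)
    return lightness, (a, b)


def ab_distance(predicted_ab, true_ab):
    """Euclidean distance in the ab plane (an assumed comparison measure)."""
    da = predicted_ab[0] - true_ab[0]
    db = predicted_ab[1] - true_ab[1]
    return (da * da + db * db) ** 0.5


# A colored pixel carries information in all three channels ...
lightness, true_ab = split_training_pair((200, 60, 40))
# ... while a gray pixel has (almost exactly) a = b = 0.
gray_l, gray_ab = split_training_pair((128, 128, 128))
```

A gray pixel ends up with a and b close to zero, which is why a black and white postcard provides only the L channel and the color channels have to be predicted.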

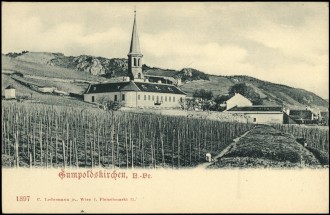

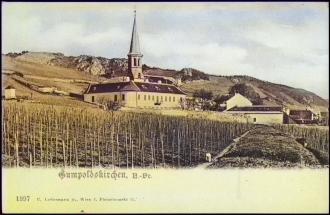

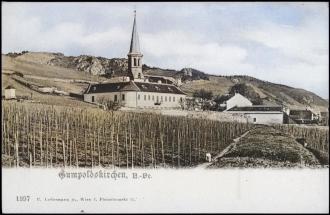

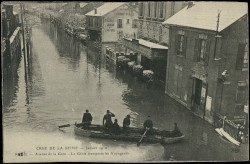

See figure 3 for a sample case of applying the two models (ECCV16 and SIGGRAPH17) of R. Zhang and collaborators to a black and white historical postcard. Typically, ECCV16 outputs a more colorful, saturated image, while SIGGRAPH17 more consistently outputs an image with believable colors, at the cost of a more uniform color palette. Both models recognize skies and trees well and colorize them adequately, presumably because these are well represented in the training data.

How to train your own network

We wanted to use transfer learning to adapt the SIGGRAPH17 model described above to historical postcards and thereby train our own model. As a first step we built our training dataset: from the collection of all historical postcards (see here for the metadata of all postcards) we selected those with a color marker and downloaded them at a resolution of 256 × 256 pixels, as the models above work with this resolution. See figure 4 for a sample view of our training data. Altogether we used 7,667 images for training.

In the next step we initialized the SIGGRAPH17 model and fixed all its parameters except those in the 10th layer. We then trained only the 10th layer on our training dataset. See our GitLab repository for the code that was used to train the network and to download the training data. To run the training in reasonable time (hours instead of days) we recommend using GPU hardware. You can use your own or, for example, run the Jupyter notebook in Google Colab, which provides free (albeit limited) access to GPUs for training machine learning models.
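The idea of fixing all parameters except those of one layer can be illustrated with a deliberately tiny, framework-free toy model. This is not the code from our GitLab repository: the two-layer model, the parameter names such as `layer2.w`, and the learning rate are assumptions made only for this illustration. In a deep learning framework the same effect is usually achieved by excluding the frozen parameters from gradient updates, for example by setting `requires_grad = False` on them in PyTorch.

```python
def relu(v):
    return v if v > 0.0 else 0.0


def predict(params, x):
    """Toy two-layer model: linear -> ReLU -> linear."""
    hidden = relu(params["layer1.w"] * x + params["layer1.b"])
    return params["layer2.w"] * hidden + params["layer2.b"]


def fine_tune(params, data, trainable, lr=0.1, epochs=500):
    """Gradient descent on squared error that updates only `trainable`."""
    params = dict(params)  # keep the "pretrained" values untouched
    for _ in range(epochs):
        for x, target in data:
            pre = params["layer1.w"] * x + params["layer1.b"]
            hidden = relu(pre)
            err = params["layer2.w"] * hidden + params["layer2.b"] - target
            gate = 1.0 if pre > 0.0 else 0.0  # derivative of ReLU
            grads = {
                "layer1.w": 2 * err * params["layer2.w"] * gate * x,
                "layer1.b": 2 * err * params["layer2.w"] * gate,
                "layer2.w": 2 * err * hidden,
                "layer2.b": 2 * err,
            }
            # Frozen parameters are simply never updated.
            for name in trainable:
                params[name] -= lr * grads[name]
    return params


# Hypothetical "pretrained" weights and a small new dataset to adapt to.
pretrained = {"layer1.w": 1.0, "layer1.b": 0.0, "layer2.w": 1.0, "layer2.b": 0.0}
new_data = [(i / 4, 0.5 * (i / 4) + 0.2) for i in range(5)]

tuned = fine_tune(pretrained, new_data, trainable=("layer2.w", "layer2.b"))
```

After fine-tuning, the first layer still holds its pretrained values, while the last layer has adapted to the new data. Training only one layer keeps the number of parameters to be learned small, which suits a training set of a few thousand images.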

Results

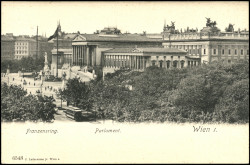

Finally, we wanted to compare the output of the two models of R. Zhang et al. with that of the model we trained ourselves on the collection of color postcards. See below (figure 5) for a sample of applying these models to black and white postcards.

As we start from black and white images, there is no objective metric to judge the quality of the colorized output (i.e., there is no ground truth for these images). However, humans can judge the plausibility of the color images. Overall, the images produced by our own network (or more precisely, by having re-trained the 10th layer of the SIGGRAPH17 network) have plausible colors, but they appear to have a historical tint with more green in the whole image. This is probably due to the many colored postcards in the training set with green landscapes on them.

Contribute

Did this demonstration arouse your interest in machine learning? Would you like to color in your own black and white images? Please do send us an email if you have any questions or feedback. We are looking forward to hearing from you!

The complete code base is available in the repository for this project on our public GitLab platform, containing (among various Python scripts) a Jupyter notebook that explains how to train your own network.